WARNING! The file SCG/monitor-plot.html must not be edited with an HTML editor. It contains markup that must stay on its lines as is. See the documentation in the local file ~hgs/Manuals/SCG-monitor.txt on Holt.

On Brimer: log on via VNC as administrator

On Elder: User HGS

cd /home/SGA/bctemp

kx

broadcast-G1 &

cd perlproc/SCG

delgap # follow the instructions, incl. restarting the agents

source RESUME

If this does not help, the condition is new and must be investigated.

Without this, a big gap in Holt's monitor-plot.html will persist for one hour.

. KX

The xterm windows associated with the broadcast monitor should now disappear.

ps | fgrep xterm

If this results in nothing, go on with the reboot.

ps | fgrep bash

ps | fgrep perl

touch STOP STOPSEND

and check again with `ps | fgrep perl` that they have disappeared.

kill -KILL pid

starting with the PIDs of perl, then bash, and finally xterm. If the xterms are stuck, never mind; go ahead with the reboot.
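The kill order above can be prepared with a small POSIX sh sketch that reads `ps` output and prints the `kill -KILL` commands for perl, then bash, then xterm, so you can check them before running them. It rests on assumptions: the `ps` layout is taken to be Cygwin-style (an optional one-letter status column, the PID as the first all-digit field, the program path as the last field), and the function names `pids_of` and `kill_plan` are hypothetical. It only prints the commands, because the shell you type in may itself be a bash that should survive.

```shell
#!/bin/sh
# Hypothetical helper: print (not execute) kill commands in the order
# perl, bash, xterm. Assumes Cygwin-style `ps` output where the PID is the
# first all-digit field and the program path is the last field.

# pids_of NAME: read ps output on stdin, print PIDs whose program is NAME
pids_of() {
    awk -v name="$1" '
    {
        pid = ""
        for (i = 1; i <= NF; i++)          # first all-digit field = PID
            if ($i ~ /^[0-9]+$/) { pid = $i; break }
        cmd = $NF
        sub(/.*\//, "", cmd)               # strip the directory part
        if (pid != "" && cmd == name) print pid
    }'
}

# kill_plan: one "kill -KILL ..." line per program that is still running
kill_plan() {
    listing=$(ps)
    for prog in perl bash xterm; do
        pids=$(printf '%s\n' "$listing" | pids_of "$prog" | tr '\n' ' ')
        pids=${pids% }                     # drop the trailing blank
        if [ -n "$pids" ]; then
            echo "kill -KILL $pids"
        fi
    done
}
```

Run `kill_plan`, compare the PIDs with `ps`, and paste the lines you agree with.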

. sx

Start an xterm terminal from the Cygwin console if one didn't come up due to sx:

. st

That'll be your launch window. Go there.

Programs are in /home/SGA/perlproc: rolling.pl and udp-brc-file.pl. Here's how to start them:

tcsh

Multiple recipients for the daily dataset can be specified, e.g. holt:barre

source minipath

setenv TODAYDEST holt

cd /home/SGA/perlproc

In the other

source start-rolling

cd /home/SGA/perlproc

The predicted tides are stored in ~/perlproc/PT/

source start-broadcast

cd /home/SGA/perlproc

if necessary. The following command will kill the persistent xterms by process number:

SCG-accu-agent.pl - Runs the UPD client and computes the tide residual, similar to SCG-html-agent on Holt.Scripts for scp are in ~/bin:

file-monitor-agent.pl - Maintains the integrity of the monitor data, helps Holt to mend gaps.

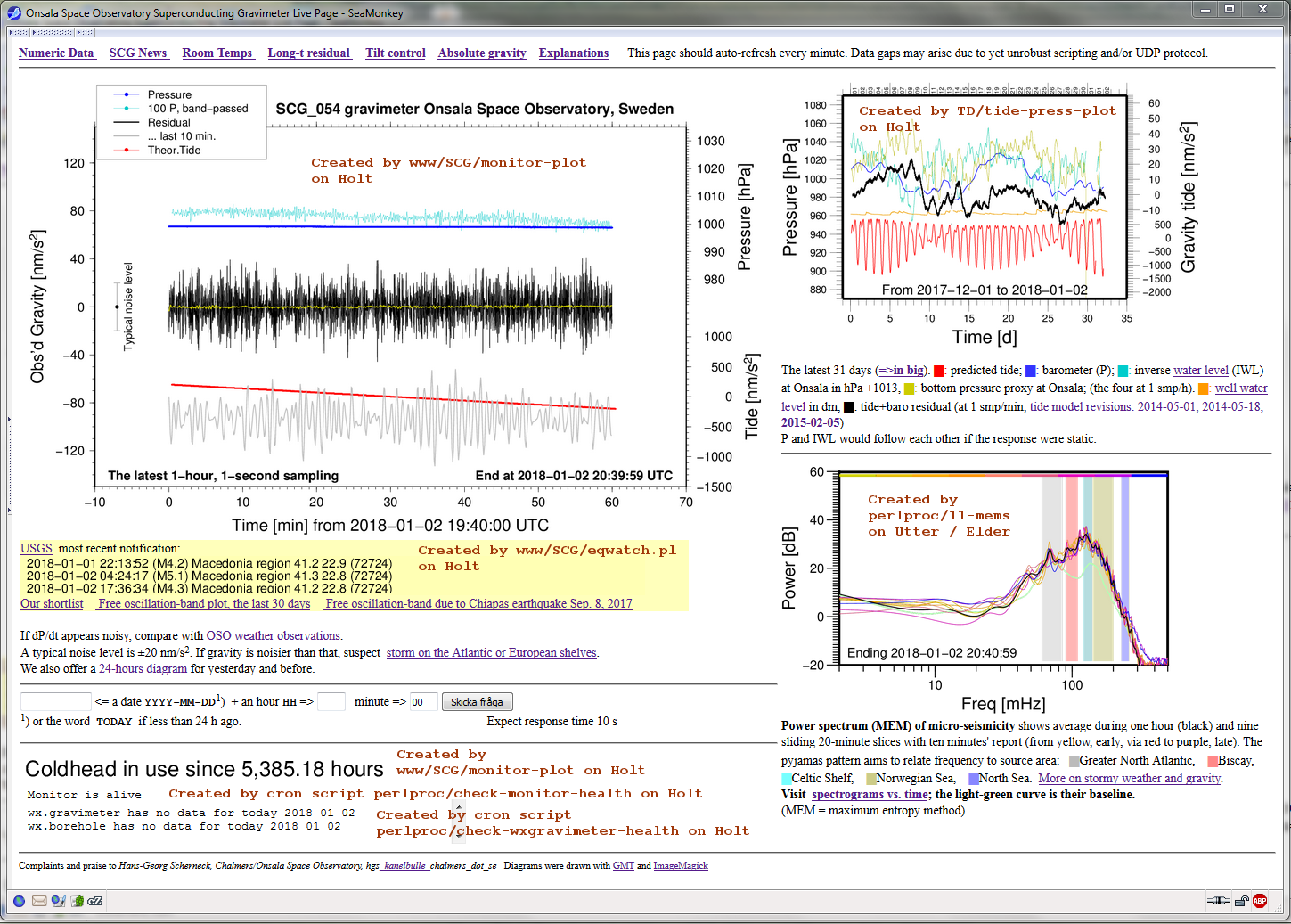

tide-press-plot - Prepares the 30-days' tides and pressure plot (in the upper-right of the monitor)

11-mems - Prepares the MEM-spectra for the last hour (plot in the lower-right of the monitor)

scp2h - scp to HoltThe script actually invoked is set through the environment, e.g.

scp2ba - scp to Barre

scp2LIST - a whole range of recipients

setenv SCPPGM /home/HGS/bin/scp2hwhich is also the default.

Applies to Elder and Utter.

Uncontrolled:

Be careful to stop all Cygwin and Windows processes that could hold up the reboot.

Otherwise the computer will stop at a prompt asking whether the operator agrees to stop them.

Elder console: . sx

Elder console: . st

Elder 3 times: xterm &

in each xterm: tcsh

Elder xterm: source minipath

Elder xterm: holt

Holt xterm: tcsh

Holt xterm...

ps -uhgs

You will probably find orphan processes:

SCG-html-agent - /home/hgs/perlproc/SCG-html-agent.pl - runs the UDP client

retro-agent - /home/hgs/let/retro-agent.pl - Serves user requests for plotting in the past.

eqwatch - /home/hgs/perlproc/eqwatch.pl - checks mail inbox for earthquakes

Try to kill the first two using

cd ~/perlproc

STOP RCV RET

and kill eqwatch more efficiently using kill -KILL <pid>

STOP RFMT

Reboot.

. sx # starts X and an xterm

. st # starts an additional xterm. Repeat as often as desired. Then you can hide the Cygwin console.

The following assumes that you run tcsh, so issue in each xterm:

tcsh

source minipath

# This is necessary to speed up program location; the paths are extended to the software you need below. So this command is important!

Starting the jobs at Elder

holt

It will open another xterm window. Start tcsh there (there is no minipath since it's a Linux machine).

In the window connected to holt (frame title = hgs@holt) issue

tcsh

and note the active jobs

j

cd ~/perlproc

In the window connected to Elder (frame title = HGS@HGS-Dator) issue

STOP EQW RET

cd ~/perlproc

and note the active jobs and their job numbers

j

STOP RFMT

Any job with job number n that does not stop in reasonable time can be stopped by

^C

to kill STOP and then

kill %n

Do the rebooting. Start the jobs again at Elder and at Holt.

Start Cygwin again. Open a couple of xterm windows from the console window with

. sx

. st

. st

Use one of the xterms to connect to Holt. The command is simply

holt

and start tcsh in the new xterm that will appear.

tcsh

Done. Continue with Elder as follows:

cd perlproc

The programs may be started in different xterms (tide-press-plot isn't a top figure; it should be replaced

Connect to Elder, e.g. by ssh, or at the very machine, start Cygwin and issue

. sx

tcsh

source minipath

If we are not copying to Holt, set the machine in the script /home/HGS/bin/scp2LIST and issue

setenv SCPPGM /home/HGS/bin/scp2LIST

echo $path

Check that path contains ( . ~/perlproc ... ); add it if necessary.

cd perlproc/SCG

Try

sync-monitor

If that does not work,

prune-monitor -T # will preserve the last two hours of monitor.txt

# prune-monitor -T -b h-n # will preserve the last n hours

Start each of the eternally running programs in its own xterm

(this was once accomplished with source START, but now you have to do it in separate xterms):

source start-SCG-accu-agent.csh

source start-file-monitor-agent.csh

source start-11-mems.csh

setenv SCPPGM noscp # before source START

source minipath

and/or, to synchronize the monitor files on Elder with the more recent ones on Utter,

cd perlproc/SCG

prune-monitor -M -b h-1 # prunes monitor, preserves the last hour

prune-monitor -T # prunes monitor.txt, preserves the last two hours

prune-monitor -b h-1 -x .dat # prunes monitor.dat, preserves the last hour

cd perlproc/SCG

sync-monitor

The latter method is for a smooth take-over while Utter keeps running.

source start-SCG-accu-agent.csh

source start-file-monitor-agent.csh

source start-11-mems.csh

After issuing the start commands, wait at least ten minutes while you observe that the processes keep running. The buffer files need some new data, and a gap near the restart won't be filled before some time has passed. 11-mems needs 60 minutes before all the curves can be drawn, but ten minutes suffice for drawing the first.
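While you watch the newly started agents for the recommended ten minutes, a check like the following POSIX sh sketch can confirm that given PIDs are still alive. The function name `stays_alive` is hypothetical; it relies only on `kill -0`, which tests for a process without sending it a signal (note that it also fails for processes owned by another user).

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every given PID is still alive
# after SECONDS seconds, polling once per second with `kill -0`.

# stays_alive SECONDS PID...
stays_alive() {
    secs=$1
    shift
    elapsed=0
    while :; do
        for pid in "$@"; do
            if ! kill -0 "$pid" 2>/dev/null; then
                echo "process $pid is gone after ${elapsed}s" >&2
                return 1
            fi
        done
        [ "$elapsed" -ge "$secs" ] && return 0
        sleep 1
        elapsed=$((elapsed + 1))
    done
}
```

For example, with the three agent PIDs taken from `jobs -l` or `ps`: `stays_alive 600 1234 1235 1236 && echo "all agents still running"`.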

-rw-r--r-- 1 HGS None 29364 Apr 23 12:54 monitor.ts

-rw-rw-r-- 1 HGS None 409920 Apr 23 12:54 monitor.txt

-rw-rw-r-- 1 HGS None 416640 Apr 23 12:54 monitor.txt.tmp

-rw-rw-r-- 1 HGS None 6720 Apr 23 12:54 monitor.wrk

-rw-rw-r-- 1 HGS None 19763520 Apr 23 12:18 monitor.dat

-rw-r--r-- 1 HGS None 0 Apr 14 16:04 monitor.tmp - dates from the last restart

etc., older files of no particular interest

set-broker elder

Now you can stop the processes on Utter:

source minipath

cd perlproc/SCG

STOP RFMT

The reverse swap should work by exchanging Elder <-> Utter.

------------------ End Swapping Elder / Utter ------------------------

It's best to start eqwatch and retro-agent from a stationary machine like Elder in an ssh-xterm window to Holt.

SCG-html-agent - /home/hgs/perlproc/SCG-html-agent.pl - runs the UDP client; it is restarted automatically from cron by /home/hgs/perlproc/monitor-cronstart every 30 minutes.

tide-press-plot - /home/hgs/TD/new-tpplot P -T RADAR - normally a cron job, but after a long break it should be executed from the command window with a reprocessing option. Read the instructions:

cd /home/hgs/TD; /home/hgs/TD/new-tpplot -h

pt4brimer - /home/hgs/TD/pt4brimer - computes predicted tides; a cron job.

eqwatch - /home/hgs/perlproc/eqwatch.pl - checks mail inbox for earthquakes.

retro-agent - /home/hgs/let/retro-agent.pl - Serves user requests for plotting in the past.

cd ~/perlproc

source START

Combined stop:

STOP RETRO E[QWATCH]

The retro-agent is not involved in the real-time processing.

cd ~/TD

foreach i ( `fromto 0 31` )

# prtide4day -XD -D 2013,01,02 -A $i # with drift term

prtide4day -X -D 2013,01,02 -A $i

end

./scp2b -N 32

The cron job on the third day of each month:

/home/hgs/TD/pt4brimer

cd ~/let

~/let is a directory where SCG-monitor.cgi can place files. The rule with CGI scripts is (or seems to be) that shelling out (`sh commands`;) is not allowed (not that simple). An easy way to start jobs on requests from a CGI script is to have an agent running. The agent, however, cannot change the file permissions: the owner is www-data, and so is the group. The CGI script could check whether the agent has fulfilled the request and delete the content of the request file. However, whether a request gets followed up would then depend on the surfer's behaviour. It appears more robust to let the agent decide whether a request is unanswered.

retro-agent.pl &

# to stop: touch STOPRETRO

# to test: touch TESTRETRO and perhaps touch ONETRIPRETRO

# While STOPRETRO is removed automatically when retro-agent.pl is started,

# the other signal files must be removed manually.
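The signal-file convention of the agents (a STOP file ends the loop and is cleared at start-up; a ONETRIP file limits the run to a single pass and must be removed by hand) can be sketched as a POSIX sh loop. This only illustrates the convention; it is not the code of retro-agent.pl, which is a Perl program. `agent_loop` and its arguments are hypothetical, and the TEST file is not modelled.

```shell
#!/bin/sh
# Hypothetical illustration of the signal-file convention:
#   STOP<TAG>     ends the loop; removed when the agent starts
#   ONETRIP<TAG>  quit after one pass; left in place for manual removal

# agent_loop DIR TAG INTERVAL CMD...: run CMD every INTERVAL seconds
agent_loop() {
    dir=$1 tag=$2 interval=$3
    shift 3
    rm -f "$dir/STOP$tag"              # a stale stop file must not block a restart
    while :; do
        [ -e "$dir/STOP$tag" ] && break
        "$@"                           # one pass, e.g. answer pending requests
        [ -e "$dir/ONETRIP$tag" ] && break
        sleep "$interval"
    done
}
```

Usage, with a hypothetical per-pass command: `agent_loop ~/let RETRO 10 ./serve-requests &`, stopped later by `touch ~/let/STOPRETRO`.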

This could become a cron script to run every morning:

set today=`date '+%Y-%m-%d'`

set yester=`jdc -- -A-1 $today`

ls -l /home/hgs/www/SCG/PNG/????-??-??-??-??-monitor.png | awk -v y=$yester '($8 == y){print $9}' | xargs rm -f
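An alternative to the `ls -l | awk` selection above, whose column layout depends on the ls version and locale, is to select the plots by the date at the start of their file names. The POSIX sh sketch below assumes that this leading date is the day to purge and that the names follow the ????-??-??-??-??-monitor.png pattern; `purge_day` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical alternative: delete DIR/YYYY-MM-DD-??-??-monitor.png,
# selecting by the date embedded in the file name.

# purge_day DIR YYYY-MM-DD
purge_day() {
    dir=$1 day=$2
    for f in "$dir/$day"-??-??-monitor.png; do
        [ -e "$f" ] || continue        # pattern matched nothing
        rm -f -- "$f"
    done
}
```

Usage with GNU date: `purge_day /home/hgs/www/SCG/PNG "$(date -d yesterday +%Y-%m-%d)"`; elsewhere pass the date computed with jdc as above.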

# m h dom mon dow command

30 03 * * * /home/HGS/Seisdata/gcf-tar -X -b 1 > /home/HGS/Seisdata/gcf-tar.log 2>&1

00 08 2 * * cd /home/HGS/Tidedata/; ./cp-from-sg -X prev > /home/HGS/Tidedata/cp-from-sg.log 2>&1

/home/hgs/wx/MAREO/get-oso-mareograph includes data from the bubbler mareograph.

# m h dom mon dow command

30 01 * * * /home/hgs/wx/ARC/get-oso-weather > /home/hgs/wx/ARC/get-oso-weather.log 2>&1

55 01 * * * /home/hgs/wx/MAREO/get-oso-mareograph > /home/hgs/wx/MAREO/get-oso-mareograph.log 2>&1

50 06 * * * /home/hgs/wx/WELL/get-well-level > /home/hgs/wx/WELL/get-well-level.log 2>&1

50 14 * * * /home/hgs/wx/WELL/get-well-level > /home/hgs/wx/WELL/get-well-level.log 2>&1

#56 01 * * * /home/hgs/wx/BUBBLER/get-oso-bubbler -d 1h -S 2 > /home/hgs/wx/BUBBLER/get-oso-bubbler.log 2>&1

40 * * * * /home/hgs/wx/TEMP/get-monutemps > /home/hgs/wx/TEMP/get-monutemps.log 2>&1

41 * * * * /home/hgs/wx/TEMP/plot/plot-monutemps > /home/hgs/wx/TEMP/plot/plot-monutemps.log 2>&1

45 07 * * * /home/hgs/SMHI/getsealevel -g r3g.txt >> /home/hgs/SMHI/crontouch 2>&1

45 19 * * * /home/hgs/SMHI/getsealevel -g r3g.txt >> /home/hgs/SMHI/crontouch 2>&1

25 07 * * * /home/hgs/TD/get-tide-data > /home/hgs/TD/logs/get-tide.log 2>&1

50 07 * * * /home/hgs/TD/tide-press-plot -DD -U > /home/hgs/TD/logs/tide-press-plot.log 2>&1

40 07 * * * /home/hgs/TD/yesterdays-G1-plot > /home/hgs/TD/logs/yesterdays-G1-plot.log 2>&1

05 15 * * * /home/hgs/TD/tide-press-plot -DD -UF > /home/hgs/TD/logs/tide-press-plot.log 2>&1

07 15 * * * /home/hgs/TD/actual-memsp-vs-time > /home/hgs/TD/logs/actual-memsp-vs-time.log 2>&1

45 23 * * * /home/hgs/TD/actual-memsp-vs-time > /home/hgs/TD/logs/actual-memsp-vs-time.log 2>&1

30 06 1 * * /home/hgs/TD/GGP-send-data -F > /home/hgs/TD/logs/GGP-send-data.log 2>&1

00 21 3 * * /home/hgs/TD/pt4brimer > /home/hgs/TD/logs/pt4brimer.log 2>&1

00 22 * * 5 /home/hgs/TD/get-atmacs -w > /home/hgs/TD/logs/get-atmacs-w.log 2>&1

15 22 * * * /home/hgs/SMHI/auto-tgg > /home/hgs/SMHI/auto-tgg.log 2>&1

00 08 2 * * /home/hgs/TD/monthly-600s -NP R > /home/hgs/TD/logs/monthly-600s.log 2>&1

10 03 * * * /home/hgs/TD/tilt-control-monitor -pwr -PZ > /home/hgs/TD/logs/tilt-control-monitor.log 2>&1

12 03 * * * /home/hgs/TD/tilt-control-monitor -bal -PZ >> /home/hgs/TD/logs/tilt-control-monitor.log 2>&1

10 08 * * * /home/hgs/TD/longt-gbres > /home/hgs/TD/logs/longt-gbres.log 2>&1

10 22 * * 5 /home/hgs/TD/ts4openend GBR-PMR-ATR-TG-TAXP > /home/hgs/TD/logs/ts4openend.log 2>&1

00 07 * * * /home/hgs/wx/WELL/plot-rr2wl-lastmonth > /home/hgs/wx/WELL/plot-rr2wl-lastmonth.log 2>&1

35 08 * * * /home/hgs/SMHI/plot-tgg > /home/hgs/SMHI/plot-tgg.log 2>&1

58 23 * * * /home/hgs/Seism/USGS/ens-daily-upd > /home/hgs/TD/logs/ens-daily-upd.log 2>&1

58 11 * * * /home/hgs/Seism/USGS/ens-daily-upd > /home/hgs/TD/logs/ens-daily-upd.log 2>&1

#00 08 * * * /home/hgs/TD/daily-fo-pdg.sh -r -25/55/5 -e Coquimbo -A > /home/hgs/TD/logs/daily-fo-pdg.log 2>&1

10 08 * * * /home/hgs/TD/daily-fo-pdg.sh -r AUTO -floor -25 -d -30 -FW -e last-30-days -A -remember > /home/hgs/TD/logs/daily-rolling-fo-pdg.log 2>&1

24 * * * * /home/hgs/perlproc/monitor-cronstart > /home/hgs/TD/logs/monitor-cronstart.log 2>&1

54 * * * * /home/hgs/perlproc/monitor-cronstart > /home/hgs/TD/logs/monitor-cronstart.log 2>&1
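Each entry above redirects its output to a log file, so a log's modification time shows when the job last ran. The sketch below, with the hypothetical name `log_is_fresh`, tests that in POSIX sh; note that `find -mmin` is provided by GNU and BSD find but is not part of POSIX.

```shell
#!/bin/sh
# Hypothetical helper: true if FILE exists and was modified within
# MINUTES minutes (relies on find -mmin, a GNU/BSD extension).

# log_is_fresh FILE MINUTES
log_is_fresh() {
    [ -e "$1" ] || return 1
    [ -n "$(find "$1" -mmin "-$2")" ]
}
```

Since monitor-cronstart runs at :24 and :54, a log older than about 35 minutes suggests cron has not run it: `log_is_fresh /home/hgs/TD/logs/monitor-cronstart.log 35 || echo "monitor-cronstart has not run lately"`.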

in ~/TD/MEMS

$RJD:$H.msp

$YYYY-$MM-$DD-memsp-vs-time.grd

memsp-vs-time.grd

memsp-vs-time.ps

in ~/www/SCG/MEMS

2012-08-01-memsp-vs-time-tn.png

2012-09-01-memsp-vs-time.png

etc.

Programs:

~/TD/daily-mems

~/TD/MEMS/memsp-vs-time

To repair missing bits, not including today (this happens rarely indeed):

cd ~/TD

daily-mems -M MEMS [-n #ndays] #date

daily-mems -R MEMS [-n #ndays] #date

cd MEMS

memsp-vs-time -D -OT ~/www/SCG/MEMS -d #YYYY-MM-01 31

or

memsp-vs-time -S -D -OT ~/www/SCG/MEMS -d #YYYY-MM-01 31

if the files of today haven't been sent from Elder to Holt

or

memsp-vs-time -D -OT ~/www/SCG/MEMS -A #RJD -d #YYYY-MM-01 31

if the files of the day (RJD) are not found on Elder but on Holt.

Repair missing bits of today, if memsp-vs-time -S did not close all gaps: handwork!

cd ~/TD

scpb -R G1121230.054

daily-resid -B F -T garb RAW_o054/G1121230.054

Check whether erroneous files are in the way:

ls MEMS/`jdc -di 2012-12-30`*.msp # remove what's necessary

Either:

daily-mems -R MEMS -n 2 2012-12-30 # for yesterday and today

or:

daily-mems -R MEMS -n 1 2012-12-31 # for today

then recommended:

actual-memsp-vs-time

or:

cd MEMS

memsp-vs-time -D -OT ~/www/SCG/MEMS -A 56291 -d 2012-12-01 31

Finally:

cd ~/TD

rm -f o/G1_garb_121230-1s.mc
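The `-A #RJD` and `-d #YYYY-MM-01` arguments of memsp-vs-time can be derived from a calendar date. The sketch assumes that the day number expected here is the integer Modified Julian Date, which is consistent with the example above pairing 56291 with 2012-12-30; `jdc -di` remains the tool the procedure actually uses. `day_number` and `month_start` are hypothetical names, and `date -d` requires GNU date.

```shell
#!/bin/sh
# Hypothetical helpers for the memsp-vs-time arguments.
# Assumption: the day number is the integer MJD (days since 1858-11-17).

# day_number YYYY-MM-DD: 1970-01-01 is day 40587 on this scale
day_number() {
    secs=$(date -u -d "$1" +%s) || return 1
    echo $(( secs / 86400 + 40587 ))
}

# month_start YYYY-MM-DD: first day of the same month, for -d
month_start() {
    echo "${1%-*}-01"
}
```

For the example above: `memsp-vs-time -D -OT ~/www/SCG/MEMS -A "$(day_number 2012-12-30)" -d "$(month_start 2012-12-30)" 31`.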

|

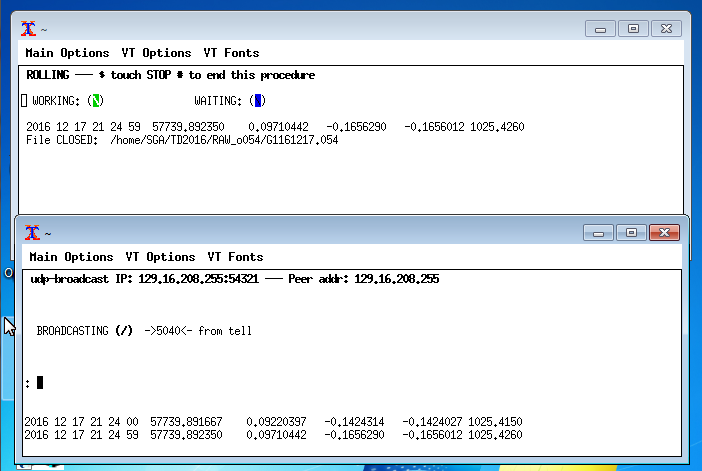

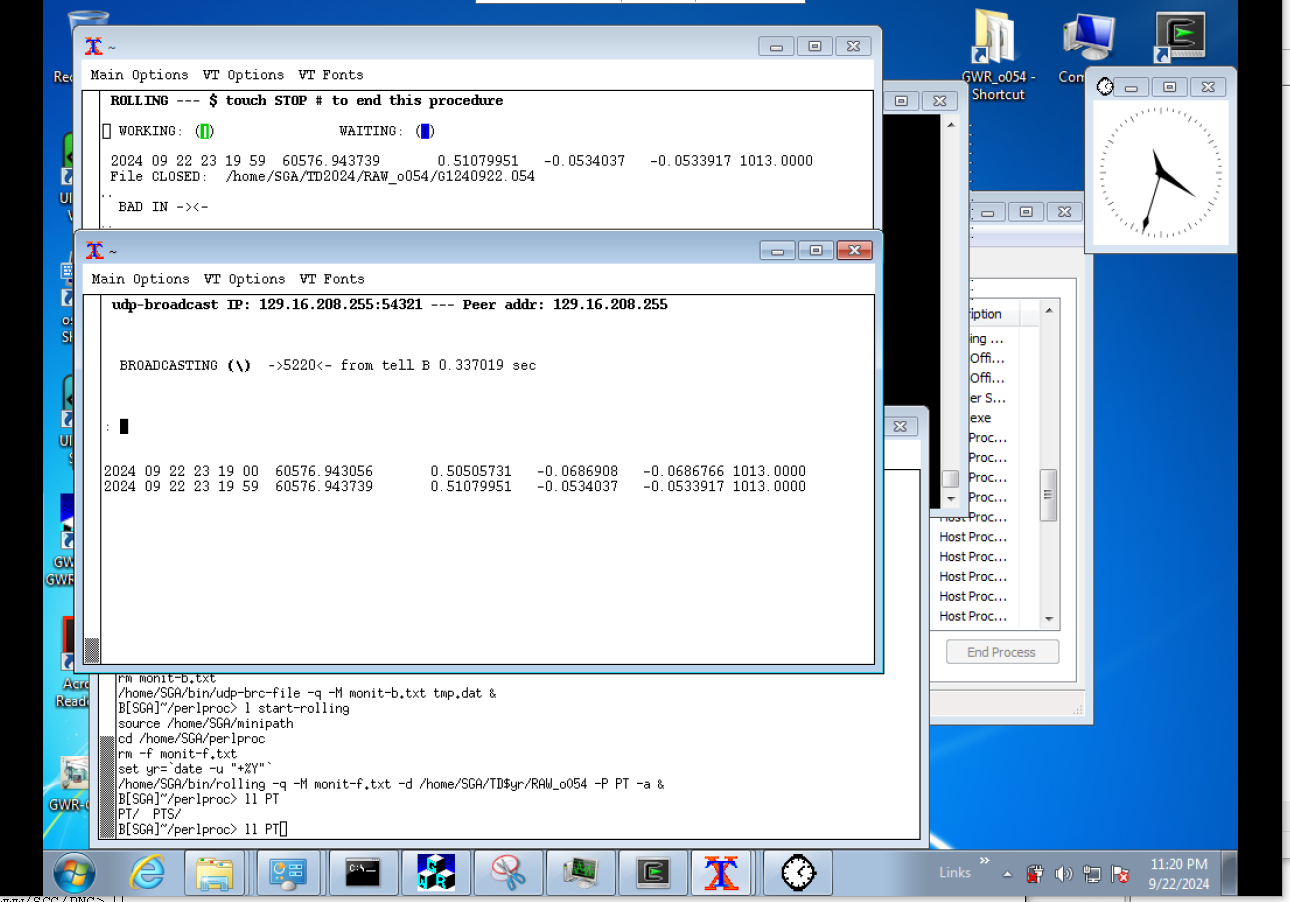

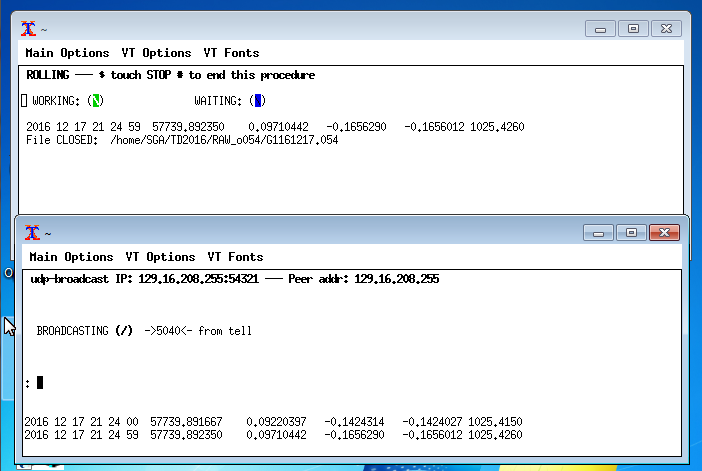

| Figure above: This is the normal look of the

broadcast processes. The heartbeat marks are symbols,

eventually with a coloured symbol like (/);

they change angle every minute. Samples of the

broadcast record are also shown. If the windows appear dead

or incomplete, follow the guidelines below. |

Use the console window and issue

jobs

and kill the XWin job, usually

kill %1

This will remove the windows. Exit the console window too.

xterm, bash, tcsh, perl

4. Start the broadcast processes manually

. sx

^Z # means: <Ctrl>-Z

bg %1

. st

. st

. st

In the first of the xterms, enter

tcsh

cd bctemp

/home/SGA/bin/rolling -q -M monit-f.txt -d /home/SGA/TDYEAR/RAW_o054 -P PT -a &

In the second xterm

tcsh

cd bctemp

/home/SGA/bin/udp-brc-file -q -M monit-b.txt tmp.dat &